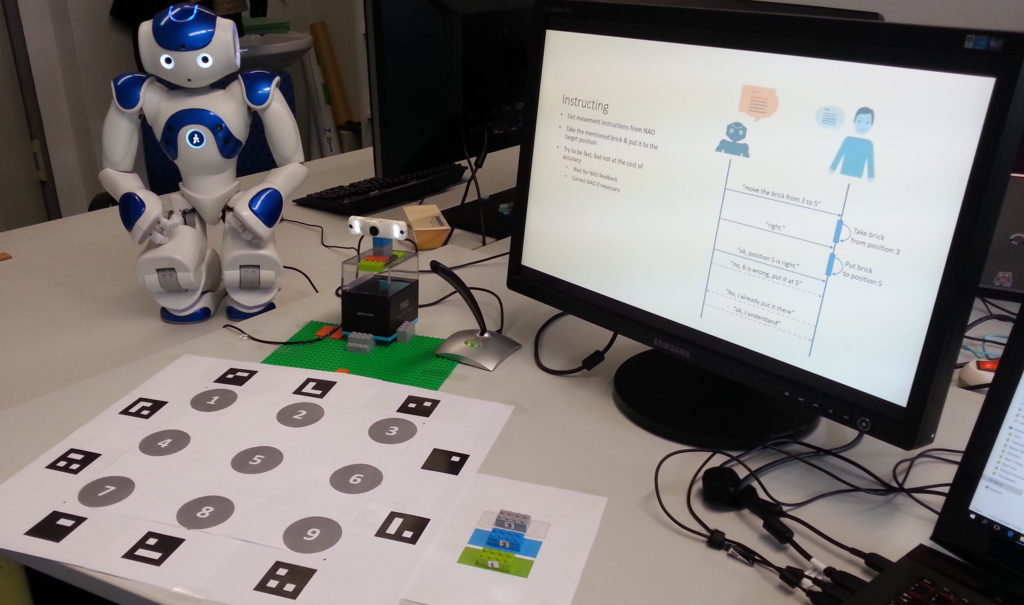

Multimodal Human-robot Interaction setup with NAO

Gaze is known to be a dominant modality for conveying spatial information, and it has been used for grounding in human-robot dialogues. In this work, we present the prototype of a gaze-supported multi-modal dialogue system that enhances two core tasks in human-robot collaboration: