We recently presented a prototype for gaze-guided object classification at the UbiComp 2016 conference. The work also attracted the interest of Pupil Labs, the manufacturer of the eye tracking device we used.

Gaze-guided Object Classification Demo

Abstract

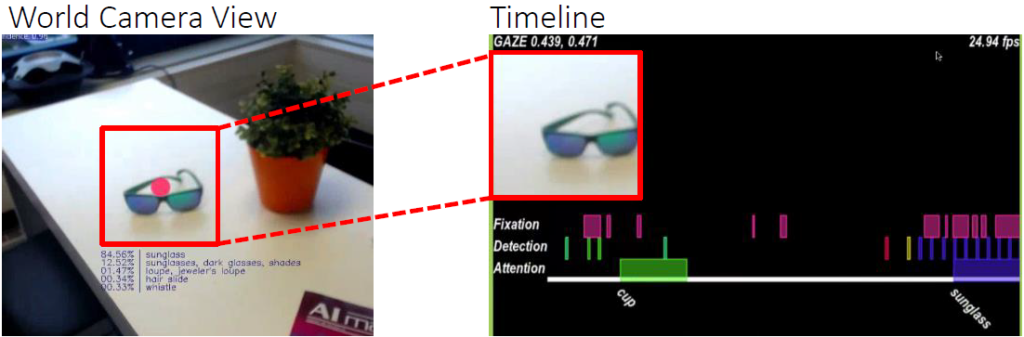

Recent advances in eye tracking technologies have opened the way to designing novel attention-based user interfaces. This is promising for pro-active and assistive technologies for cyber-physical systems in domains such as healthcare and Industry 4.0. Prior approaches to recognizing a user’s attention are usually limited to the raw gaze signal or to sensors in instrumented environments. We propose a system that (1) combines the gaze signal with the egocentric camera of the eye tracker to identify the objects the user focuses on; (2) employs object classification based on deep learning, which we recompiled for our purposes on a GPU-based image classification server; (3) detects whether the user actually pays attention to that object; and (4) combines these modules to construct episodic memories of egocentric events in real time.
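To make steps (1) and (3) more concrete, here is a minimal sketch of how they might look in code. It is not the implementation from the paper. The function names, the patch size, and the "same label for several consecutive frames" attention rule are all assumptions made for illustration, as is the convention that gaze arrives as normalized coordinates with the origin at the bottom-left of the scene image.

```python
from typing import List, Optional, Tuple


def gaze_crop_box(norm_x: float, norm_y: float,
                  frame_w: int, frame_h: int,
                  patch: int = 200) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a square patch around the gaze point.

    Assumption: gaze is normalized to [0, 1] with the origin at the
    bottom-left, so y is flipped to match image rows (origin top-left).
    """
    cx = int(round(norm_x * frame_w))
    cy = int(round((1.0 - norm_y) * frame_h))
    half = patch // 2
    # Shift the box back inside the frame instead of shrinking it at the borders.
    left = min(max(cx - half, 0), max(frame_w - patch, 0))
    top = min(max(cy - half, 0), max(frame_h - patch, 0))
    right = min(left + patch, frame_w)
    bottom = min(top + patch, frame_h)
    return left, top, right, bottom


def is_attended(labels: List[Optional[str]], min_consecutive: int = 3) -> bool:
    """Hypothetical attention rule: the most recent `min_consecutive`
    classification results all name the same object (None = no result)."""
    if len(labels) < min_consecutive:
        return False
    recent = labels[-min_consecutive:]
    return recent[0] is not None and all(l == recent[0] for l in recent)


# Example: gaze at the centre of a 1280x720 scene frame.
box = gaze_crop_box(0.5, 0.5, 1280, 720)
attended = is_attended(["cup", "cup", "cup"])
```

The cropped patch would then be sent to the classification server, and the returned labels fed into the attention check. The actual system may well use a different cue, such as fixation duration, to decide whether the user is attending to the object.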

Video

Reference