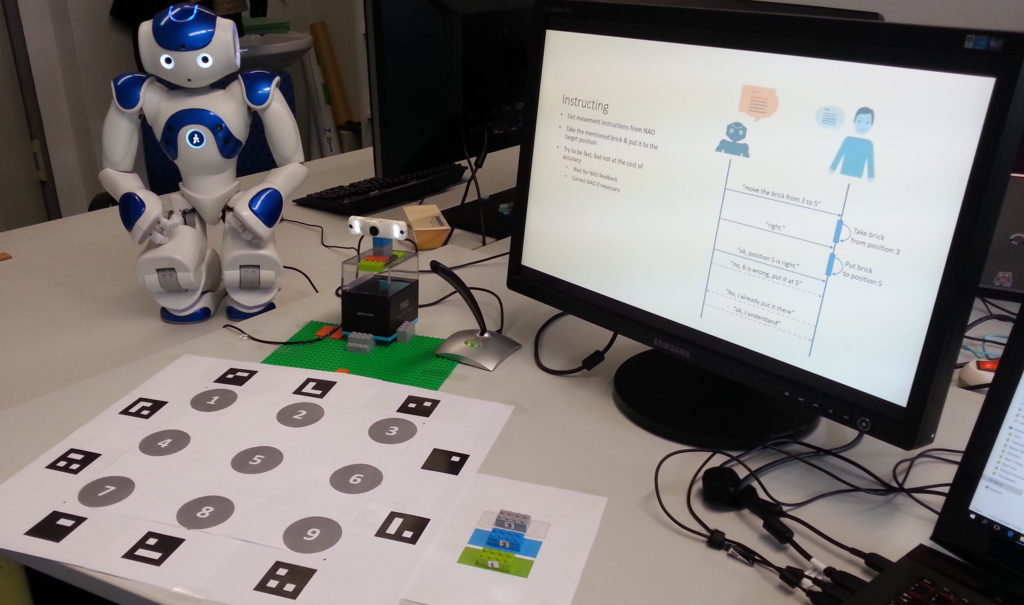

Multimodal Human-robot Interaction setup with NAO

Gaze is known to be a dominant modality for conveying spatial information, and it has been used for grounding in human-robot dialogues. In this work, we present the prototype of a gaze-supported multi-modal dialogue system that enhances two core tasks in human-robot collaboration:

- our robot is able to learn new objects and their location from user instructions involving gaze, and

- it can instruct the user to move objects and passively track this movement by interpreting the user’s gaze.

We performed a user study to investigate the impact of different eye trackers on user performance. In particular, we compare a head-worn device and an RGB-based remote eye tracker. Our results show that the head-mounted eye tracker outperforms the remote device in terms of task completion time and the required number of utterances due to its higher precision.

Video

Reference

Evaluating Remote and Head-worn Eye Trackers in Multi-modal Speech-based HRI. In: Mutlu, Bilge; Tscheligi, Manfred; Weiss, Astrid; Young, James E. (Ed.): Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, HRI 2017, Vienna, Austria, March 6-9, 2017, pp. 79–80, ACM, 2017.